Through a series of panels, the AI Governance Virtual Symposium seeks to promote discussion around the proper institutional and legal framework for the development and use of artificial intelligence. The series examines (potential) governance structures for artificial intelligence at various levels—from local to global. The AI Governance Virtual Symposium is co-hosted by the Georgetown Institute for Technology Law & Policy and the Yale Information Society Project. Antoine Prince Albert III, Heather Branch, Hillary Brill, Anupam Chander, April Doss, Niklas Eder, Nikolas Guggenberger, Daniel Maggen, Keshav Raghavan, Eoin Whitney, and Kyoko Yoshinaga have contributed to the programming and the materials.

AI Governance Virtual Symposium: Exploring AI Accountability Policy with Russ Hanser

(June 29, 2023)

Co-hosted by Yale–Wikimedia Initiative on Intermediaries and Information (WIII) & Georgetown Institute for Technology Law & Policy

The AI Governance Virtual Symposium welcomed Russ Hanser, NTIA Associate Administrator for Policy Analysis and Development, for a discussion about AI accountability efforts. NTIA, part of the U.S. Department of Commerce, has requested public comment on AI accountability policy and will produce a report on how to build a more robust AI accountability ecosystem.

Guest Speakers: Anupam Chander, Scott K. Ginsburg Professor of Law and Technology, Georgetown Law

Nikolas Guggenberger, Assistant Professor, University of Houston Law Center

Mehtab Khan, Director, Wikimedia Initiative at the Yale Information Society Project

Neel Sukhatme, Anne Fleming Research Professor; Professor of Law, Georgetown Law

Moderator: Natalie Roisman, Executive Director, Georgetown Law Institute for Technology Law & Policy

AI Governance Symposium: Perspectives on Algorithmic Accountability with Senator Ron Wyden

(March 3, 2023)

Co-hosted by Yale–Wikimedia Initiative on Intermediaries and Information (WIII) & Georgetown Institute for Technology Law & Policy

In the last session of Congress, Senator Ron Wyden (D-OR), Senator Cory Booker (D-NJ), and Representative Yvette Clarke (D-NY-9) introduced S.3572, the Algorithmic Accountability Act of 2022, intended to increase transparency and bring new government oversight of software, algorithms, and other automated systems. The legislation was an update of the 2019 Algorithmic Accountability Act. The AI Governance Symposium hosted Senator Wyden on campus to discuss concerns about algorithmic bias and the future of algorithmic accountability legislation.

Guest Speaker: Senator Ron Wyden, D-OR

Expert Panel:

Paul Ohm, Professor of Law; Chief Data Officer; Georgetown University Law Center

Nikolas Guggenberger, Assistant Professor; The University of Houston Law Center

Dennis Hirsch, Professor of Law; Director, Program on Data and Governance; Ohio State University

Kyoko Yoshinaga, Non-Resident Senior Fellow of Georgetown Law’s Institute for Technology Law & Policy

Mehtab Khan, Resident Fellow and the lead for the Yale/Wikimedia Initiative on Intermediaries and Information

AI Governance Virtual Symposium: Brazil's Draft AI Bill

(February 3, 2023)

Co-hosted by Yale–Wikimedia Initiative on Intermediaries and Information (WIII) & Georgetown Institute for Technology Law & Policy

For this session of the AI Governance Virtual Symposium, we hosted the two leading members of Brazil’s expert committee on AI regulation. The Senate-appointed committee held numerous public hearings and received input from industry, civil society, and academics. The draft bill it prepared as a result of that process covers transparency, copyright, discrimination, and individual rights. It seeks to learn from proposals in the U.S. and the European Union to design a model of its own that fits Brazil’s social and legal reality. Our panelists explored these issues from different perspectives.

Guest speakers: Justice Ricardo Cueva, Superior Court of Justice, Brazil

Laura Schertel Mendes, Senior Visiting Researcher at the Goethe-Universität Frankfurt am Main

Interviewers:

Anupam Chander, Scott K. Ginsburg Professor of Law and Technology, Georgetown Law

Marcela Mattiuzzo, Visiting Fellow, Information Society Project

Moderator: Artur Pericles, Wikimedia Fellow at the Information Society Project, Yale Law School

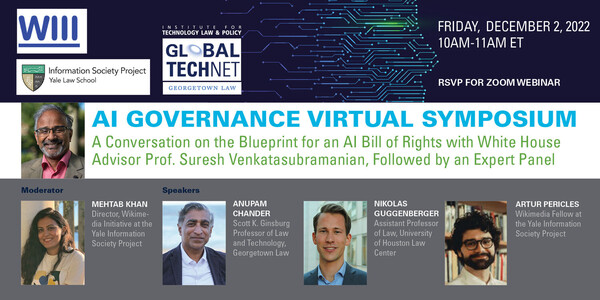

AI Governance Virtual Symposium: Blueprint for an AI Bill of Rights

December 2, 2022

Co-hosted by Yale–Wikimedia Initiative on Intermediaries and Information (WIII) & Georgetown Institute for Technology Law & Policy

Guest Speaker: Suresh Venkatasubramanian, Professor of Computer Science and Data Science, Brown University

Moderator: Mehtab Khan, Program Director, WIII, Information Society Project, Yale Law School

Panelists:

Anupam Chander, Scott K. Ginsburg Professor of Law and Technology at Georgetown University

Nikolas Guggenberger, Assistant Professor of Law, University of Houston Law Center

Artur Pericles L. Monteiro, Wikimedia Fellow, Information Society Project, Yale Law School

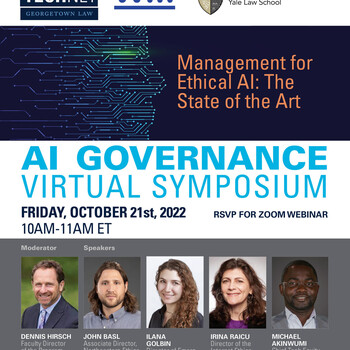

AI Governance Virtual Symposium: Management of Ethical AI: The State of the Art

October 21, 2022

Co-hosted by Yale–Wikimedia Initiative on Intermediaries and Information (WIII) & Georgetown Institute for Technology Law & Policy

Moderator: Dennis D. Hirsch, Professor of Law, The Ohio State University Moritz College of Law

Panelists:

John Basl, Associate Director, Northeastern Ethics Institute

Ilana Golbin, Director of Emerging Technology & AI, Global Responsible AI Leader, PwC

Irina Raicu, Director of the Internet Ethics program, Markkula Center for Applied Ethics

Michael Akinwumi, Chief Tech Equity Officer, National Fair Housing Alliance

AI Governance Virtual Symposium: A Conversation With Max Schrems

October 7, 2022

Co-hosted by Yale–Wikimedia Initiative on Intermediaries and Information (WIII) & Georgetown Institute for Technology Law & Policy

Guest speaker: Max Schrems, Founder, NOYB, European Centre for Digital Rights

Panelists:

Anu Bradford, Henry L. Moses Distinguished Professor of Law and International Organization, Columbia Law School

Anupam Chander, Scott K. Ginsburg Professor of Law and Technology at Georgetown University

Nikolas Guggenberger, Assistant Professor of Law, University of Houston Law Center

Artur Pericles L. Monteiro, Wikimedia Fellow, Information Society Project, Yale Law School

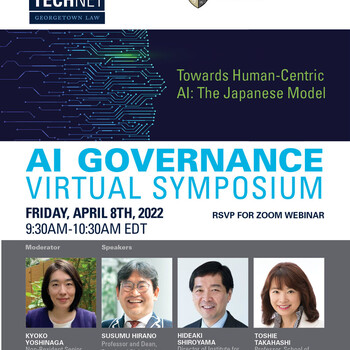

AI Governance Virtual Symposium: Towards Human-Centric AI: The Japanese Model (April 8, 2022)

Co-hosted by ISP & Georgetown Institute for Technology Law & Policy

Panelists:

Susumu Hirano, Professor and Dean, Faculty of Global Informatics, Chuo University

Hideaki Shiroyama, Director of Institute for Future Initiatives, Professor of Graduate School of Public Policy, The University of Tokyo

Toshie Takahashi, Professor, School of Culture, Media and Society/the Institute for AI and Robotics, Waseda University

Moderator: Kyoko Yoshinaga, Non-Resident Senior Fellow, Institute for Technology Law and Policy at Georgetown Law Center

Summary:

Today’s panel on human-centric AI in a Japanese context, moderated by Georgetown’s Kyoko Yoshinaga, brought together a rich group of experts in public policy, media, law, and sociology to comment on Japan’s initiatives in AI governance. The panel covered a wide range of subjects, from Japan’s role in shaping the global AI regulatory regime to human perceptions of robotics and the fears they raise.

The panel began with comments from Professor Hideaki Shiroyama, Director of the Institute for Future Initiatives and Professor at the Graduate School of Public Policy, The University of Tokyo. Professor Shiroyama divided his talk into two parts. The first, on international strategy, dealt with Japan’s use of global forums such as the OECD and the G20 to develop, share, and promote the nation’s vision for AI governance. He mentioned the Beijing AI Principles and drew on comparative data from Japan, Finland, and Ireland on familiarity with robots in care settings. He stressed that Japan’s AI policy has been, and continues to be, developed against the backdrop of the country’s aging population and prevailing culture. This is reflected in all spheres, including the treatment of robots, which are regarded not merely as tools for humans but as entities in their own right. The second part, on culture, looked closely at the relationship between humans and AI and presented seven basic principles that society should observe in order to make full use of AI without causing social imbalance. The highlight of Professor Shiroyama’s presentation was a striking image from a Japanese political campaign: a poster showing two human candidates alongside a robot, which was apparently how the third candidate wanted to present himself, as a neutral, transparent entity free of the vices of human nature!

Next in the speaker lineup was Professor Susumu Hirano, Professor and Dean, Faculty of Global Informatics, Chuo University. Professor Hirano has worked with the Japanese Cabinet as well as the Ministry of Internal Affairs and Communications. He spoke of his experience directly shaping numerous soft-law instruments (i.e., guidelines and principles) for AI for the Japanese Government. Japan’s goal was to create a set of principles that would be applicable to AI globally. He believes these principles should be akin to the OECD’s privacy guidelines, which have been widely adopted worldwide. He added that the OECD in fact prepared its own AI principles at Japan’s urging, and that those principles reflect Japan’s guidelines and principles. He believes that global collaboration and interoperability are desirable for an effective AI regime. His talk and work focused on soft law as the basis of Japan’s current AI governance. This soft law, in the form of directions and guidelines co-created with industry members, adheres to the social principles drafted for human-centric AI, and it is these principles that schools, government organizations, and corporations alike have embraced.

The third speaker was Professor Toshie Takahashi from the School of Culture, Media and Society/the Institute for AI and Robotics, Waseda University. Her work is premised on the broad question of how human happiness can be placed at the center of AI governance. She explained that Japanese narratives of AI differ sharply from Western ones: Japan’s AI narrative tends to be utopian, while the West’s is more dystopian. Professor Takahashi, however, focuses on the positive contributions AI can make to society, such as new avenues for diversity and inclusion. She believes it can also help foster dialogue between Japan and the rest of the world. She cautioned, however, against AI’s potential to increase discrimination and to foster addiction and overdependence. Acknowledging the potential for chaos, she added that an AI society must prioritize the sustainability of human society. She believes we now have the potential to achieve the Sustainable Development Goals, but that doing so requires moving from an “AI first” or “nation first” motto to a “human first” one. She is concerned about the gap between the natural and social sciences in AI research and suggested that we create AI for good together through a cross-disciplinary approach. She described two of her projects that seek to close this gap. The first, ‘A Future with AI’, conducted in collaboration with the United Nations, focuses on how children and young adults respond to AI. The second, ‘Project GenZAI’ (genzai means ‘now’), explores, through the lens of Generation Z, ways of making AI that prioritizes humans.

The panel was packed with information, and in the final segment moderator Yoshinaga posed three major questions: (1) Will soft law work well for regulating AI? (2) What are the differences between Japan’s and the EU’s human-centric AI approaches? (3) What cultural progress has the Japanese AI regime seen?

In response to the first, Professor Hirano stated firmly that soft law is indeed the way to go, since it is co-created by the government and corporations together [along with academics and representatives of consumer groups] (the so-called “multi-stakeholder approach”). Corporations are therefore naturally willing to adhere to rules they have set for themselves. In fact, he believes that hard law may be more a bane than a boon in these circumstances. Professor Shiroyama answered the second question, attributing the difference between the EU and Japan to the EU’s choice of hard law versus Japan’s choice of soft law. He also pointed to cultural differences, as each regime of human-centric AI is deeply embedded in its own culture. Finally, Professor Takahashi spoke of the rise of robots and techno-animism in Japanese culture. She cited statistics showing that people in Japan have accepted AI but do not want robots to look like them, nor do they want AI to behave impulsively, as humans sometimes do. The panel closed with all speakers reflecting on Japan’s contribution to the global discourse, with Professor Hirano specifically emphasizing Japan’s history as a harmonizing legal regime that can weave harmony between different national legal and regulatory structures around the world.

Summary by Esha Meher, ISP Student Fellow

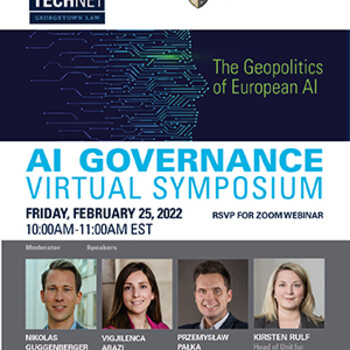

AI Governance Virtual Symposium: The Geopolitics of European AI (February 25, 2022)

Co-hosted by ISP & Georgetown Institute for Technology Law & Policy

Panelists:

Vigjilenca Abazi, Assistant Professor of European Law at Maastricht University

Przemyslaw Palka, Assistant Professor of Law at Jagiellonian University

Kirsten Rulf, Head of Unit for Digital and Technology Policy and Nerd-in-Chief at the Federal Chancellery of Germany

Moderator: Nikolas Guggenberger, Executive Director of the Yale Information Society Project

Summary:

Following the panel on “The Geopolitics of Chinese AI,” this session discussed Europe’s role in the world of AI, both in terms of software development and in creating a thriving regulatory framework around it. The key instruments under the spotlight were the European Commission’s Artificial Intelligence Act, proposed in April 2021, and the General Data Protection Regulation (GDPR), which took effect in 2018. The panel was moderated by Yale ISP’s Executive Director, Nikolas Guggenberger, and featured Vigjilenca Abazi, an Assistant Professor of European Law at Maastricht University; Przemyslaw Palka, an Assistant Professor of Law at Jagiellonian University; and Kirsten Rulf, Head of Unit for Digital and Technology Policy, who also uses the designation ‘Nerd-in-Chief’ at the Federal Chancellery of Germany!

The conversation began with an expression of solidarity with the people of Ukraine before Vigjilenca Abazi opened the panel in response to the moderator’s question about the future and direction of European AI. She emphasized the twin issues of compliance and transparency at the heart of the EU’s AI regulatory framework and noted the mismatch between the spirit and the letter of the law. Pointing to pre- and post-market assessments for determining risk, as well as definitional vagueness, she highlighted drawbacks of Europe’s multi-tiered regulatory regime.

Przemyslaw Palka spoke next and said he was skeptical of the AI Act’s ambition to govern the market. He was particularly wary of its horizontal approach, which divides AI systems by risk so that the rules apply only to “high-risk” systems. Ironically, however, “high risk” remains an undefined component of the legislation. Later in the discussion, Palka stressed that it is inaccurate to say that regulation is bad for the Internet; rather, it is bad regulation, such as the AI Act, that is bad for the Internet, because it is long and expensive yet shies away from making regulatory choices. The most crucial questions about risk are left to the market, so it is private parties and law firms that end up defining and answering much of this component. Unlike the American approach, which focuses on the effects of regulation, Europe places disproportionate blame on enforcement issues while treating the social landscape as an ideal one.

Kirsten Rulf offered rich insight into the social and political factors behind the regulatory regime’s success and failure. She noted that views of AI oscillate between seeing it as a silver bullet and as a dangerous, discriminatory tool. Yet people do not shy away from using AI on their personal devices, which raises many more cultural and policy challenges than strictly legal ones. One purpose of the Act may simply be to ‘signal’ to EU citizens. Much like the GDPR, the AI Act is a geopolitical tool rather than a purely legal one. Noting that the EU-US Trade and Technology Council, negotiations on the EU-US Privacy Shield, and discussions in other forums have stalled for years, she described the Act as the EU’s political move to control the narrative. Responding to Palka, she wondered aloud whether leaving decisions to the market is indeed a flaw or rather a design principle.

Abazi squarely addressed the extraterritorial reach of the GDPR, often associated with the “Brussels effect,” and drew parallels between the concept of privacy in EU law today and that of human rights globally 20 years ago. She said that Europe’s current stance of not signing trade agreements until privacy compliance is achieved resembles the human rights compliance demands of earlier years. The final segment addressed other critical questions posed to the panelists, including regulatory pace (whether AI regulation is developing fast enough to capture and respond to social nuances) and how much the AI Act materially regulates the landscape, given that much of it is already addressed by Article 22 of the GDPR.

Summary by Esha Meher, ISP Student Fellow

AI Governance Virtual Symposium: The Geopolitics of Chinese AI (January 21, 2022)

Co-hosted by ISP & Georgetown Institute for Technology Law & Policy

Panelists:

Simon Chesterman, Dean of the Faculty of Law at National University of Singapore and Senior Director of AI Governance at AI Singapore

Jeffrey Ding, Postdoctoral Fellow at Stanford's Center for International Security and Cooperation

Samm Sacks, Senior Fellow at Yale Law School Paul Tsai China Center & New America

Kendra Schaefer, Partner and Head of Tech Policy Research at Trivium China

Moderator: Anupam Chander, Scott K. Ginsburg Professor of Law and Technology, Georgetown University

Summary:

In this AI Governance Virtual Symposium Series session, Professor Anupam Chander moderated a discussion between Simon Chesterman, Jeffrey Ding, Kendra Schaefer, and Samm Sacks.

Professor Chander started by posing the following puzzle: if the US and China are in the midst of a geopolitical race to become the world's AI superpower, why are both of them cracking down on big tech? Recent Chinese regulations on data and algorithms caused major Chinese internet companies to lose over $1 trillion in market value.

The discussion started with Kendra Schaefer, Partner and Head of Tech Policy Research at Trivium China, analyzing the recent wave of Chinese regulations directed at tech companies. These cover data security, antitrust, IPOs, online content moderation, gaming, and education. To illustrate the extent and breadth of this wave, Schaefer discussed the regulation of recommendation algorithms, which recently came into force, calling it a "world-first" and an "example for Europe and the United States." She argued that while some of these rules, such as those that require algorithms to promote "positive feelings" and "mainstream values," are specific to China's legal and political system, many others, such as those aimed at protecting privacy, battling internet addiction, and increasing user control, address nearly universal concerns.

AI regulations may make sense even from a market perspective, suggested Jeffrey Ding, Postdoctoral Fellow at Stanford's Center for International Security and Cooperation, because they are meant to promote AI development that is sustainable and legitimate. If one thinks of AI diffusion across the entire economy as a "long game" taking decades, then thoughtful and publicly salient regulation can be a "first step towards more sustained acceptance and more sustainable development of AI in China."

Samm Sacks, a Senior Fellow at Yale Law School Paul Tsai China Center & New America, argued that the recent wave of regulation has its roots in the Cybersecurity Law, which took effect in 2017. These laws and regulations exemplify a core tension between the government's goal of increasing its control over data and the internet and its goal of maintaining the growth of the digital economy. This tension can be seen in the debates between Chinese agencies that are more security-focused and those that are more oriented toward the global economy.

Simon Chesterman, Dean of the Faculty of Law at National University of Singapore and Senior Director of AI Governance at AI Singapore, argued that the recent Chinese wave of regulations is motivated by an amalgam of at least three different goals. First, there is the political need to rein in technology companies because they "were getting a bit too powerful," and a "bit too ahead of the curve in terms of their politics." The second is to advance social objectives. Examples include the limits on the number of hours children can play computer games and the crackdown on the for-profit after-school education system. Finally, there are purely economic reasons. What is striking, Chesterman suggests, is that China decided that promoting certain social and political goals is worth the loss of a trillion dollars in market value.

Summary by Gilad Abiri, ISP Visiting Fellow

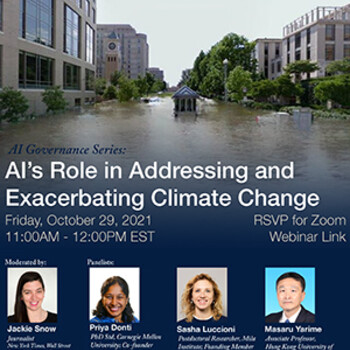

AI Governance Virtual Symposium: AI's Role in Addressing and Exacerbating Climate Change (October 29, 2021)

Co-hosted by ISP & Georgetown Institute for Technology Law & Policy

Panelists: Priya Donti, Chair of Climate Change AI

Sasha Luccioni of the Mila Institute and Co-Founder of Climate Change AI

Professor Masaru Yarime of the Hong Kong University of Science and Technology

Moderator: Jackie Snow, Journalist, New York Times, Wall Street Journal, National Geographic and others

Summary:

In this installment of the AI Governance Virtual Symposium Series, journalist Jackie Snow moderated a discussion between Priya Donti, Sasha Luccioni, and Masaru Yarime on the role of artificial intelligence in addressing and exacerbating climate change.

Priya Donti, Chair of Climate Change AI, surveyed the different ways AI applications can be used to mitigate climate change and support climate action, either by reducing greenhouse gas emissions or by helping adapt to the effects of climate change. These applications include information gathering, forecasting, improving operational efficiency, performing predictive maintenance, accelerating scientific experimentation, and approximating time-sensitive simulations. At the same time, AI can be used in systems that directly or potentially increase greenhouse gas emissions, such as emission-intensive industries, in addition to the substantial amounts of energy consumed by AI systems themselves.

Sasha Luccioni of the Mila Institute and Co-Founder of Climate Change AI discussed the “This Climate Does Not Exist” project. In this project, AI is used to generate images that simulate the appearance of climate events in user-chosen locations. Research suggests that by bringing these effects closer to home, such AI-produced personalized imagery can help viewers better grasp the urgency of climate change.

Professor Masaru Yarime of the Hong Kong University of Science and Technology noted the increasing involvement of AI systems in climate change-related technologies. After surveying these involvements, including promoting energy efficiency in information and communication systems, industry, transportation, and the household, Professor Yarime discussed how these use cases could fit into existing AI governance regimes.

Summary by Daniel Maggen, ISP Visiting Fellow

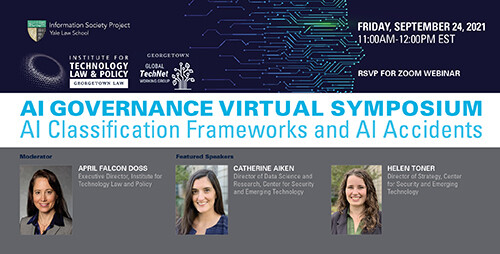

AI Governance Virtual Symposium: Classifying AI Systems and Understanding AI Accidents (September 24, 2021)

Co-hosted by ISP & Georgetown Institute for Technology Law & Policy

Panelists: Catherine Aiken, the Director of Data Science and Research at CSET

Helen Toner, Director of Strategy at CSET

Moderator: April Falcon Doss, Executive Director of the Georgetown Institute for Technology Law and Policy

Summary:

In this semester’s first session of the AI Governance Virtual Symposium, moderated by April Falcon Doss, Executive Director of the Georgetown Institute for Technology Law and Policy, Catherine Aiken and Helen Toner from Georgetown’s Center for Security and Emerging Technology (CSET) presented their work on AI classification and AI accidents.

Catherine Aiken, the Director of Data Science and Research at CSET, discussed CSET’s work on developing AI classification frameworks for policymakers. Because AI is a general-purpose technology, different AI systems can have different meanings and implications for various regulatory frameworks. Responding to the challenge AI’s multifacetedness poses to policymaking, CSET, in collaboration with the OECD, seeks to develop a user-friendly framework to classify AI systems uniformly along policy-relevant dimensions. To be successfully employed by policymakers, classifications need to be readily usable and understandable, characterize the elements most relevant for policy and governance, involve minimal administrative burden, be attuned to other AI governance frameworks, and be reliably consistent across a range of users. In line with these key criteria, CSET has developed two alternative and complementary classification frameworks: one classifies AI systems according to their level of autonomy and impact, and the other looks at the context the system operates in, the kind of input it receives, the model it utilizes, and its output.

Helen Toner, Director of Strategy at CSET, discussed CSET’s work on the emerging phenomenon of AI accidents. Regulatory frameworks risk lagging behind the rapid development of AI technology. To give the policy response sufficient time to adapt, CSET has been developing tools to foresee AI-related problems before they arise. Doing so involves drawing both on past accidents involving non-AI technologies and on known weaknesses and vulnerabilities characteristic of AI systems, such as failures when encountering unexpected input, failures to devise appropriate specifications, and difficulties with a system’s interpretability and with assuring users of its accuracy. Looking at these two sources, the research has identified five factors contributing to AI accidents: competitive pressure, system complexity, the speed at which AI systems operate, untrained and distracted users, and cascading effects in multiple-instance systems. In responding to these weaknesses, policymakers can invest in AI safety R&D and in standards and testing capacity, work across borders to reduce accident risk, and facilitate information sharing.

Summary by Daniel Maggen, ISP Visiting Fellow

AI Governance Virtual Symposium: Watching Algorithms, The Role of Civil Society (June 18, 2021)

Co-hosted by ISP & Georgetown Institute for Technology Law & Policy

Panelists: Julia Angwin, The Markup, Editor-in-Chief and Founder

Iverna McGowan, Center for Democracy & Technology, Europe Director

David Robinson, Cornell's College of Computing and Information Science, Visiting Scientist, AI Policy and Practice Initiative

Moderator: Byron Tau, Wall Street Journal, Reporter

Summary:

In this year’s concluding session of the AI Governance Virtual Symposium, Byron Tau of the Wall Street Journal led Julia Angwin, Iverna McGowan, and David Robinson in a discussion on the role of civil society in keeping the use of algorithms in check.

Julia Angwin, Founder and Editor-in-Chief of The Markup, discussed the role of journalism in bringing to light the various ways in which different AI use cases affect our lives, from hiring algorithms to those used in criminal proceedings. Although algorithms are prevalent and prone to introducing and amplifying various biases, they are often subject to little scrutiny. Furthermore, algorithms can be used to evade accountability for failures for which comparable human decision-makers would be held accountable.

Iverna McGowan, the Europe Director of the Center for Democracy & Technology, discussed the EU’s recently published draft AI regulation. The proposed regulation tasks governmental agencies with determining the level of risk posed by various AI-based systems and regulates them according to their risk level. However, this risk-based approach should not come at the expense of a rights-based approach that puts the AI’s potential effect on human rights at center stage. Civil society organizations have an essential role in ensuring that the use of AI lives up to human rights standards and the general principles of the rule of law, including transparency and fairness.

David Robinson of Cornell’s College of Computing and Information Science focused on the mechanisms that can be used in the service of AI governance. Examples of such mechanisms can be gleaned from the process of organ allocation, which has been subject over the years to various regimes of public oversight and input. The debate over algorithms can act as a moral spotlight, focusing attention on specific aspects of fairness. However, there are also more general questions about whether algorithms should be used at all in different circumstances.

Summary by Daniel Maggen, ISP Visiting Fellow

AI Governance Virtual Symposium: How Do We Regulate AI? Comparative Perspectives (May 28, 2021)

Co-hosted by ISP & Georgetown Institute for Technology Law & Policy

Panelists:

Chinmayi Arun, Resident Fellow, Information Society Project, Yale Law School

Jessica L. Rich, Esq., Distinguished Fellow, Institute for Technology Law and Policy, Georgetown Law; Former Director of the Bureau of Consumer Protection, Federal Trade Commission

Lucilla Sioli, Director, Artificial Intelligence and Digital Industry, DG Connect, European Commission

Moderator: Anupam Chander, Professor of Law, Georgetown University

Summary:

In the third session of the AI Governance Virtual Symposium, Professor Anupam Chander moderated a panel on comparative perspectives on AI regulation featuring Chinmayi Arun of the Information Society Project, Jessica L. Rich of the Institute for Technology Law and Policy, and Lucilla Sioli of the European Commission.

Discussing AI regulation in the global South, Chinmayi Arun noted the Western norms and imagery imposed by major multinational companies on the global majority through data collection choices and model development. This problem is exacerbated by the fact that some countries in the majority world are not democracies and others have weak regulators. Arun discussed how this results in technologies criticized in the minority world being embraced by countries in the majority world. Lastly, Arun touched on the tension between states’ questioning of certain technologies and international agreements guaranteeing the free flow of data.

Lucilla Sioli discussed the EU’s proposed use of the CE product marking framework to regulate the placement of AI products on the European market. Sioli stressed that the purpose of the proposed regulation is not to regulate AI technology as such but rather to impose rules on using certain AI systems in specific contexts, according to a scaled measurement of the system’s risk and sensitivity. In the proposed regulation, AI use cases are ranked from mundane, low-risk systems to high-risk and prohibited use cases. This scaled regulation, Sioli noted, can help address the concerns of businesses reluctant to use AI out of fear of customer objection.

Presenting the perspective from the US, Jessica Rich, former Director at the FTC, discussed the many non-binding principles and standards addressing the use of AI and requiring transparency, truthfulness, and nondiscrimination, as well as the increasing number of legislative proposals on the subject. Although AI technology is not regulated in the US as a general matter, Rich noted that AI is incorporated into products and services that are already governed by comprehensive regulatory frameworks. However, Rich added, further regulation is needed to ensure that corporations cannot escape accountability by assigning responsibility to an algorithm.

Summary by Daniel Maggen, ISP Visiting Fellow

AI Governance Virtual Symposium: AI Ethics & Corporate Responsibility (May 7, 2021)

Co-hosted by ISP & Georgetown Institute for Technology Law & Policy

Panelists:

Yoko Arisaka, General Manager at Sony’s Legal Department

Erika Brown Lee, SVP and Assistant GC at Mastercard

Jutta Williams, Staff Product Manager at Twitter

Moderator: Alexandra Reeve Givens, President and CEO of the Center for Democracy and Technology

Summary:

In the second session of the AI Governance Virtual Symposium, Alexandra Reeve Givens, President and CEO of the Center for Democracy and Technology, moderated a panel on AI and corporate social responsibility featuring Yoko Arisaka, General Manager at Sony’s Legal Department, Erika Brown Lee, SVP and Assistant GC at Mastercard, and Jutta Williams, Staff Product Manager at Twitter.

Discussing Sony’s ethics activities, Yoko Arisaka emphasized corporations’ duty to promote creativity and sustainability. Ethics in AI, she said, requires constantly exploring the meaning of humanity and what we are looking for as people. Though the challenges created by AI and machine learning cannot be resolved entirely, Arisaka underscored the importance of mitigating these risks and maximizing fairness. This requires transparency about the collection of personal data, including providing individuals with accessible information on how AI systems use their data. Discussing the particular challenges faced by companies with global operations, Arisaka noted that people’s sense of ethics varies. To meet this challenge, global companies must develop standard ethics guidelines in dialogue with diverse groups, including academia, industry, and the public sector.

Presenting Twitter’s Responsible Machine Learning Initiative, Jutta Williams discussed the need for public development and accountability in assessing algorithmic fairness. Williams described the initiative as resting on four pillars: taking responsibility for algorithmic decisions, equity and fairness of outcomes, transparency about decisions, and enabling user agency and algorithmic choice.

For Erika Brown Lee, corporations’ social responsibility concerning AI revolves around the question of trustworthiness, as users need to be able to trust that service providers are good stewards of their personal data. Ethical entities, Brown Lee stressed, are responsible for ensuring that individuals and their rights are honored. Individuals should own their data, control and understand how it is used, benefit from its use, and have a right to keep personal data private and secure.

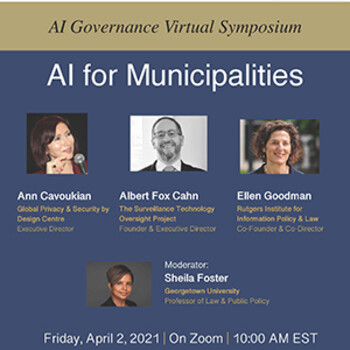

AI Governance Virtual Symposium: AI for Municipalities (April 2, 2021)

Co-hosted by ISP & Georgetown Institute for Technology Law & Policy

Panelists:

Ann Cavoukian, Executive Director, Global Privacy & Security by Design Center

Albert Fox Cahn, Founder & Executive Director, The Surveillance Technology Oversight Project

Ellen Goodman, Co-founder and Director, Rutgers Institute for Information Policy & Law

Moderator: Sheila Foster, The Scott K. Ginsburg Professor of Urban Law and Policy; Professor of Public Policy, Georgetown University

Summary:

In the first session of the AI Governance Virtual Symposium, Professor Sheila Foster moderated a panel on AI for municipalities, featuring Dr. Ann Cavoukian, Albert Fox Cahn, and Professor Ellen Goodman. Spending on smart cities, Professor Foster noted, is likely to reach more than $130 billion this year, with AI expected to play a substantial role in their operation. This development promises to reshape many facets of city life, but it also gives rise to multiple challenges, ranging from privacy and security to the lack of transparency.

Dr. Ann Cavoukian, Executive Director of the Global Privacy & Security by Design Center, discussed the need to incorporate deidentification practices at the source of data collection in smart cities. AI is not magical, Dr. Cavoukian stressed, and transparency is essential both to ensuring that privacy remains an inherent component of data gathering and as a safeguard against harmful and costly mistakes. Though generally optimistic about the potential benefits of smart cities, Dr. Cavoukian insisted that eliminating the hidden biases endemic to AI and preventing the misuse of collected data require the ongoing ability to “look under the hood” of the systems being used.

Albert Fox Cahn, Founder and Executive Director of The Surveillance Technology Oversight Project, offered a more cautious stance on the use of AI by municipalities, drawing attention to their tendency to over-collect data, often beyond the original purpose of the collection. Public oversight of data collection and use, Mr. Fox Cahn warned, is hindered by the opacity of municipal procurement, compounded by additional layers of technological complexity. As a result, it is not always clear precisely what benefits a technology promises to produce or how effectively it delivers them. To further complicate the matter, the distribution of the presumed benefits and the harms these systems entail is often lopsided, with vulnerable communities bearing the brunt of the costs while enjoying few of the gains. Even when municipalities attempt to minimize misuse by restricting the use of collected data to its original purpose, it is often difficult to prevent the data from being turned over to state and federal authorities.

Professor Ellen Goodman, Co-founder and Director of the Rutgers Institute for Information Policy and Law, focused her discussion on trust, noting how the failure to separate relatively mundane uses of AI from sensitive use cases can undermine public trust across the board. Exacerbating this challenge is the general concern over the role of private companies in data collection and its potentially harmful effects on democratic accountability and public participation. To gain public trust, municipalities must provide shielded data storage for their residents, protected from commercial and other interests through purpose limitations and privacy controls.

Summary by Daniel Maggen, ISP Visiting Fellow

AI Governance Virtual Symposium: Interview with Minister Audrey Tang on AI (March 10, 2021)

Guest Speaker: Audrey Tang, Minister, Republic of China (Taiwan)

Interviewed by: Anupam Chander, Professor of Law, Georgetown University Law Center

Nikolas Guggenberger, Executive Director, Yale Information Society Project

Kyoko Yoshinaga, Non-Resident Senior Fellow, Institute for Technology Law & Policy, Georgetown University Law Center

Summary:

In the opening segment of the AI Governance Virtual Symposium, organized by the Information Society Project at Yale Law School and the Institute for Technology Law and Policy at Georgetown Law, Audrey Tang, the Digital Minister of Taiwan, discussed Taiwan’s approach to AI governance and her vision for the future of AI with Professor Anupam Chander, Nikolas Guggenberger, Kyoko Yoshinaga, and Antoine Prince Albert III.

In her inspiring remarks, Minister Tang suggested treating AI as a means of increasing democracy’s “bitrate,” using the technology to foster and facilitate interpersonal relationships. Discussing the Taiwanese approach, Minister Tang stressed the need for government investment in digital infrastructure comparable to that allocated to traditional physical infrastructure. Without such investment, Minister Tang warned, governments would be forced to rely on private resources, which may not be compatible with democratic rule.

On the future of AI governance, Minister Tang discussed the importance of technological education for increasing digital competence and access to AI. Investments in digital competence are key to fostering democratic participation by transforming citizens into active media producers. Discussing the risks of reliance on AI, Minister Tang addressed the need to respond promptly to implicit biases by introducing transparency and robust feedback mechanisms. Likening AI technology to fire, Minister Tang advocated introducing AI literacy at a young age, teaching children how to interact with AI successfully and safely, and implementing safety measures in public infrastructure.

Offering an optimistic view of the future of AI, Minister Tang suggested that we replace talk of AI “singularity” with the language of “plurality,” using AI to expand the scope of social values to include future generations and the environment. Doing so requires international cooperation in developing norms that would promote fruitful AI governance and increased opportunities for future generations.

Summary by Daniel Maggen, ISP Visiting Fellow